Linux High Availability (HA): Building a Highly Available MySQL/MariaDB Cluster with Corosync + Pacemaker + DRBD

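Corosync: a project that belongs to the OpenAIS (Open Application Interface Specification) family. There are currently two mainstream series, 2.x and the stable 1.x, and they differ considerably. Corosync 1.x has no voting (quorum) capability of its own; the votequorum subsystem introduced in Corosync 2.0 added it. If you run 1.x and still need vote-based decisions, the Red Hat approach is to combine cman with corosync. Early versions of cman could not work with pacemaker, so to get both pacemaker and voting, cman is used as a corosync plugin that supplies the voting function; note that this effectively combines two messaging layers. After OpenAIS appeared, Red Hat built a high-availability cluster solution on that specification called cman, provided rgmanager as its resource manager, and integrated the conga full-lifecycle management interface to form RHCS.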

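Corosync is a project that branched off from the larger OpenAIS project, while Pacemaker was split out of Heartbeat v3 as a dedicated component providing the cluster resource manager (CRM) for HA clusters, and it is very capable. Corosync lets you define how messages are passed (transport, protocol, and so on) in a simple configuration file. Corosync can provide a complete HA stack and is the direction of future development, so new projects generally adopt it; heartbeat_gui offers good HA management functionality through a graphical interface.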

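Pacemaker is a cluster resource manager. It uses the messaging and membership capabilities of the underlying cluster infrastructure (OpenAIS or Heartbeat) to detect and recover from failures at the node and resource level, which gives cluster services (also called resources) high availability.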

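DRBD: DRBD (Distributed Replicated Block Device) is a distributed storage system for Linux. It consists of a kernel module, several userspace management programs, and shell scripts, and is typically used in high-availability (HA) clusters. DRBD resembles RAID 1 (mirroring), except that RAID 1 mirrors within a single machine while DRBD mirrors over the network.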

II. Environment Preparation

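(During the experiment, a mistake in the final resource definitions forced me to reinstall all the test machines, so the text and the screenshots may disagree in places. Where they do, trust the text and the recorded commands.)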

1. Operating system & IP

CentOS 6.6 x86_64

node1 172.16.6.100 Legion100.dwhd.org

node2 172.16.6.101 Legion101.dwhd.org

vip 172.16.7.200

2. Software versions

corosync-1.4.7-1.el6.x86_64

crmsh-2.1-1.6.x86_64

pacemaker-1.1.12+git20140723.483f48a-1.1.x86_64

drbd84-utils-8.9.2-1.el6.elrepo.x86_64

kmod-drbd84-8.4.6-1.el6.elrepo.x86_64

mariadb-10.0.19

3. Configure time synchronization, mutual SSH trust, and hostname resolution on node1 and node2

[root@Legion100 ~]# echo "172.16.6.100 Legion100.dwhd.org Legion100 node1" >> /etc/hosts
[root@Legion100 ~]# echo "172.16.6.101 Legion101.dwhd.org Legion101 node2" >> /etc/hosts
[root@Legion100 ~]# echo "*/5 * * * * /usr/sbin/ntpdate pool.ntp.org >/dev/null 2>&1" >> /var/spool/cron/root
[root@Legion100 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''
Generating public/private rsa key pair.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
4f:b8:f6:ab:5b:17:d0:61:f2:09:a9:0a:f1:d7:39:84 root@Legion100.dwhd.org
The key's randomart image is:
+--[ RSA 2048]----+
| .o.o |
| . E o* o |
| o +..+ |
| . . o.+. |
| . oS ... |
| . + . |
| o o . |
| . o . |
| ooo. |
+-----------------+
[root@Legion100 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@Legion101.dwhd.org
The authenticity of host 'Legion101.dwhd.org (172.16.6.101)' can't be established.
RSA key fingerprint is e9:95:aa:7f:39:5b:52:a7:9b:5e:fe:98:19:82:14:e3.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'Legion101.dwhd.org,172.16.6.101' (RSA) to the list of known hosts.
root@Legion101.dwhd.org's password:
Now try logging into the machine, with "ssh 'root@Legion101.dwhd.org'", and check in:

  .ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.
[root@Legion100 ~]#

[root@Legion101 ~]# echo "172.16.6.101 Legion101.dwhd.org Legion101 node2" >> /etc/hosts
[root@Legion101 ~]# echo "172.16.6.100 Legion100.dwhd.org Legion100 node1" >> /etc/hosts
[root@Legion101 ~]# echo "*/5 * * * * /usr/sbin/ntpdate pool.ntp.org >/dev/null 2>&1" >> /var/spool/cron/root
[root@Legion101 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''
Generating public/private rsa key pair.
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
c0:51:c6:23:c9:ed:04:d9:58:22:47:f0:1b:19:af:cd root@Legion101.dwhd.org
The key's randomart image is:
+--[ RSA 2048]----+
| o+B@+ |
| =*B* |
| *+.. |
| B. |
| o E |
| |
| |
| |
| |
+-----------------+
[root@Legion101 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub root@Legion100.dwhd.org
The authenticity of host 'Legion100.dwhd.org (172.16.6.100)' can't be established.
RSA key fingerprint is 90:26:f4:28:31:04:03:6c:9f:ec:e4:09:04:32:92:ee.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'Legion100.dwhd.org,172.16.6.100' (RSA) to the list of known hosts.
root@Legion100.dwhd.org's password:
Now try logging into the machine, with "ssh 'root@Legion100.dwhd.org'", and check in:

  .ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.
[root@Legion101 ~]#
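Appending with `>>` as above adds a duplicate line every time the step is repeated (for example after a reinstall). A minimal sketch of an idempotent alternative follows; the helper name `add_host_entry` is hypothetical and not part of the original procedure, and it only checks whether the IP already appears as the first field of some line.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME...
# Hypothetical helper: append "IP NAME..." to FILE only if no existing line
# already has IP as its first field.
add_host_entry() {
    file=$1
    ip=$2
    shift 2
    # awk exits 0 when a line whose first field equals the IP is found.
    # A missing file makes awk fail, so the entry is appended in that case too.
    if awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$*" >> "$file"
}

# Usage on a real node (run as root on both nodes):
#   add_host_entry /etc/hosts 172.16.6.100 Legion100.dwhd.org Legion100 node1
#   add_host_entry /etc/hosts 172.16.6.101 Legion101.dwhd.org Legion101 node2
```

Matching on the first field rather than using a plain `grep` avoids treating 172.16.6.10 as already present when only 172.16.6.100 is listed.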

III. Building the High-Availability Environment

1. Installing and configuring Corosync

1) node1

[root@Legion100 ~]# yum install corosync pacemaker python-dateutil python-lxml pssh -y
[root@Legion100 ~]# cd /etc/corosync/
[root@Legion100 /etc/corosync]# ls
corosync.conf.example  corosync.conf.example.udpu  service.d  uidgid.d
[root@Legion100 /etc/corosync]# cp -a corosync.conf.example corosync.conf
[root@Legion100 /etc/corosync]# grep -Ev '^(\s+)?#|^$' corosync.conf    # reference configuration
compatibility: whitetank
totem {
    version: 2
    secauth: on
    threads: 0
    interface {
        ringnumber: 0
        bindnetaddr: 172.16.0.0
        mcastaddr: 225.172.16.6
        mcastport: 5405
        ttl: 1
    }
}
logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: no
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}
amf {
    mode: disabled
}
service {
    ver: 0
    name: pacemaker
}
aisexec {
    user: root
    group: root
}
[root@Legion100 /etc/corosync]# corosync-keygen    # generate the authentication key file
Corosync Cluster Engine Authentication key generator.
Gathering 1024 bits for key from /dev/random.
Press keys on your keyboard to generate entropy.
Writing corosync key to /etc/corosync/authkey.
[root@Legion100 /etc/corosync]# ls -l /etc/corosync/
total 24
-r-------- 1 root root  128 May 30 01:43 authkey
-rw-r--r-- 1 root root 2869 May 30 01:42 corosync.conf
-rw-r--r-- 1 root root 2663 Oct 15 2014 corosync.conf.example
-rw-r--r-- 1 root root 1073 Oct 15 2014 corosync.conf.example.udpu
drwxr-xr-x 2 root root 4096 Oct 15 2014 service.d
drwxr-xr-x 2 root root 4096 Oct 15 2014 uidgid.d
[root@Legion100 /etc/corosync]# file /etc/corosync/authkey
/etc/corosync/authkey: data
[root@Legion100 /etc/corosync]#

2) node2

[root@Legion101 ~]# yum install corosync pacemaker python-dateutil python-lxml pssh -y
[root@Legion101 ~]# scp Legion100:/etc/corosync/{corosync.conf,authkey} /etc/corosync/
The authenticity of host 'Legion100 (172.16.6.100)' can't be established.
RSA key fingerprint is fd:ed:c3:bf:ed:3a:62:d3:73:18:9c:b6:3b:b8:37:f4.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added 'Legion100' (RSA) to the list of known hosts.
corosync.conf    100%  702  0.7KB/s  00:00
authkey          100%  128  0.1KB/s  00:00
[root@Legion101 ~]#
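The `bindnetaddr` value in the configuration above is the network address of the cluster interface, not a host address. The sketch below shows how that value can be derived from a node IP and netmask. The function name `network_addr` is hypothetical, and the 255.255.0.0 netmask is an assumption inferred from `bindnetaddr: 172.16.0.0`; the article does not state the netmask.

```shell
#!/bin/sh
# network_addr IP NETMASK
# Prints the network address, i.e. the bitwise AND of each IP octet with the
# matching netmask octet.
network_addr() {
    ip=$1
    mask=$2
    old_ifs=$IFS
    IFS=.
    # Split both dotted quads into eight positional parameters.
    set -- $ip $mask
    IFS=$old_ifs
    printf '%d.%d.%d.%d\n' "$(( $1 & $5 ))" "$(( $2 & $6 ))" "$(( $3 & $7 ))" "$(( $4 & $8 ))"
}

# Usage (netmask assumed, see above):
#   network_addr 172.16.6.100 255.255.0.0
```

Both node addresses should yield the same network address; if they do not, the nodes are on different subnets and the shared `corosync.conf` will not bind correctly on one of them.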

2. Installing crmsh

crmsh download location:

http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/ (pick the build that matches your own system)

1) node1

[root@Legion100 ~]# wget http://www.dwhd.org/wp-content/uploads/2015/05/crmsh-2.1-1.6.x86_64.rpm
[root@Legion100 ~]# yum install crmsh-2.1-1.6.x86_64.rpm
[root@Legion100 ~]# crm
crm(live)# help
Help overview for crmsh

Available topics:
    Overview        Available help topics and commands
    Topics          Available help topics
    Description     Program description
    CommandLine     Command line options
    Introduction    User interface
    Introcution     Tab completion
    Features        Syntax: Attribute references
    Reference       Command reference

Available commands:
    cd              Navigate the level structure
    help            Show help (help topics for list of topics)
    ls              List levels and commands
    quit            Exit the interactive shell
    report          Create cluster status report
    status          Cluster status
    up              Go back to previous level
    cib/            CIB shadow management
        commit      Copy a shadow CIB to the cluster
        delete      Delete a shadow CIB
        diff        Diff between the shadow CIB and the live CIB
        import      Import a CIB or PE input file to a shadow
crm(live)# quit
bye
[root@Legion100 ~]#

2) node2

[root@Legion101 ~]# cd
[root@Legion101 ~]# wget http://www.dwhd.org/wp-content/uploads/2015/05/crmsh-2.1-1.6.x86_64.rpm
[root@Legion101 ~]# yum install crmsh-2.1-1.6.x86_64.rpm
[root@Legion101 ~]# crm
crm(live)# help
Help overview for crmsh

Available topics:
    Overview        Available help topics and commands
    Topics          Available help topics
    Description     Program description
    CommandLine     Command line options
    Introduction    User interface
    Introcution     Tab completion
    Features        Syntax: Attribute references
    Reference       Command reference

Available commands:
    cd              Navigate the level structure
    help            Show help (help topics for list of topics)
    ls              List levels and commands
    quit            Exit the interactive shell
    report          Create cluster status report
    status          Cluster status
    up              Go back to previous level
    cib/            CIB shadow management
        commit      Copy a shadow CIB to the cluster
        delete      Delete a shadow CIB
        diff        Diff between the shadow CIB and the live CIB
crm(live)# quit
bye
[root@Legion101 ~]#

3. Starting corosync

(Note: pacemaker was integrated into corosync in the corosync configuration above, so starting corosync also starts pacemaker.)

[root@Legion100 ~]# service corosync start
Starting Corosync Cluster Engine (corosync): [  OK  ]
[root@Legion100 ~]# ssh 172.16.6.101 "service corosync start"
Starting Corosync Cluster Engine (corosync): [  OK  ]
[root@Legion100 ~]#

4. Checking the startup messages

1) Check that the corosync engine started correctly

[root@Legion100 ~]# grep -E "Corosync Cluster Engine|configuration file" /var/log/cluster/corosync.log May 30 02:43:22 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service. May 30 02:43:22 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'. [root@Legion100 ~]# ssh [email protected] "grep -E 'Corosync Cluster Engine|configuration file' /var/log/cluster/corosync.log" May 30 02:43:44 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service. May 30 02:43:44 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'. [root@Legion100 ~]#

2) Check that the initial membership notifications were sent

[root@Legion100 ~]# grep 'TOTEM' /var/log/cluster/corosync.log May 30 02:43:22 corosync [TOTEM ] Initializing transport (UDP/IP Multicast). May 30 02:43:22 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0). May 30 02:43:22 corosync [TOTEM ] The network interface [172.16.6.100] is now up. May 30 02:43:22 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed. May 30 02:43:44 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed. [root@Legion100 ~]# ssh [email protected] "grep 'TOTEM' /var/log/cluster/corosync.log" May 30 02:43:44 corosync [TOTEM ] Initializing transport (UDP/IP Multicast). May 30 02:43:44 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0). May 30 02:43:44 corosync [TOTEM ] The network interface [172.16.6.101] is now up. May 30 02:43:44 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed. May 30 02:43:44 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed. [root@Legion100 ~]#

3) Check whether any errors occurred during startup

[root@Legion100 ~]# grep 'ERROR:' /var/log/cluster/corosync.log [root@Legion100 ~]# ssh [email protected] "grep 'ERROR:' /var/log/cluster/corosync.log"

4) Check that pacemaker started correctly

[root@Legion100 ~]# grep 'pcmk_startup' /var/log/cluster/corosync.log May 30 02:43:22 corosync [pcmk ] info: pcmk_startup: CRM: Initialized May 30 02:43:22 corosync [pcmk ] Logging: Initialized pcmk_startup May 30 02:43:22 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615 May 30 02:43:22 corosync [pcmk ] info: pcmk_startup: Service: 9 May 30 02:43:22 corosync [pcmk ] info: pcmk_startup: Local hostname: Legion100.dwhd.org [root@Legion100 ~]# ssh [email protected] "grep 'pcmk_startup' /var/log/cluster/corosync.log" May 30 02:43:44 corosync [pcmk ] info: pcmk_startup: CRM: Initialized May 30 02:43:44 corosync [pcmk ] Logging: Initialized pcmk_startup May 30 02:43:44 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615 May 30 02:43:44 corosync [pcmk ] info: pcmk_startup: Service: 9 May 30 02:43:44 corosync [pcmk ] info: pcmk_startup: Local hostname: Legion101.dwhd.org [root@Legion100 ~]#
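The four log checks above can be folded into one function so that they are easy to repeat on each node. This is a minimal sketch: the function name `check_corosync_log` is hypothetical, and the patterns are taken from the log lines shown in this section, so they may need adjusting for other Corosync or Pacemaker versions.

```shell
#!/bin/sh
# check_corosync_log LOGFILE
# Returns 0 only if the engine, membership and pacemaker-plugin messages are
# present and no "ERROR:" line is found. Prints one line per failed check.
check_corosync_log() {
    log=$1
    rc=0
    grep -q 'Corosync Cluster Engine' "$log" \
        || { echo "engine start message missing"; rc=1; }
    grep -q 'TOTEM.*new membership was formed' "$log" \
        || { echo "no membership formed"; rc=1; }
    grep -q 'pcmk_startup: CRM: Initialized' "$log" \
        || { echo "pacemaker plugin not initialized"; rc=1; }
    if grep -q 'ERROR:' "$log"; then
        echo "ERROR lines present"
        rc=1
    fi
    return $rc
}

# Usage on a node:
#   check_corosync_log /var/log/cluster/corosync.log && echo "corosync log looks healthy"
```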

5. Checking the cluster status

[root@Legion100 ~]# crm status
Last updated: Sat May 30 03:00:41 2015
Last change: Sat May 30 02:44:06 2015
Stack: classic openais (with plugin)
Current DC: Legion100.dwhd.org - partition with quorum
Version: 1.1.12-1.1.12+git20140723.483f48a
2 Nodes configured, 2 expected votes
0 Resources configured

Online: [ Legion100.dwhd.org Legion101.dwhd.org ]
[root@Legion100 ~]#
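When scripting this check, the `Online:` line of the status output is the part that matters. The sketch below extracts the online node names from `crm status` text read on standard input; the function name `online_nodes` is hypothetical, and the parsing assumes the `Online: [ name name ]` layout shown above.

```shell
#!/bin/sh
# online_nodes
# Reads `crm status` output on stdin and prints one online node name per line.
online_nodes() {
    # Fields: $1 = "Online:", $2 = "[", node names, last field = "]".
    awk '/^[[:space:]]*Online:/ { for (i = 3; i < NF; i++) print $i }'
}

# Usage on a cluster node:
#   [ "$(crm status | online_nodes | wc -l)" -eq 2 ] && echo "both nodes online"
```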

IV. Installing and Configuring DRBD

1. Installing DRBD

1) node1

[root@Legion100 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
[root@Legion100 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm
[root@Legion100 ~]# yum install drbd84 kmod-drbd84 -y
[root@Legion100 ~]# chkconfig --add drbd
[root@Legion100 ~]# chkconfig drbd on
[root@Legion100 ~]# chkconfig --list drbd
drbd            0:off   1:off   2:on    3:on    4:on    5:on    6:off
[root@Legion100 ~]#

2) node2

[root@Legion101 ~]# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
[root@Legion101 ~]# rpm -Uvh http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm
[root@Legion101 ~]# yum install drbd84 kmod-drbd84 -y
[root@Legion101 ~]# chkconfig drbd on
[root@Legion101 ~]# chkconfig --list drbd
drbd            0:off   1:off   2:on    3:on    4:on    5:on    6:off
[root@Legion101 ~]#

2. Configuring DRBD

[root@Legion101 /tmp]# cd /etc/drbd.d/
[root@Legion101 /etc/drbd.d]# grep -Ev '^(\s+)?#|^$' global_common.conf
global {
    usage-count yes;    # let LINBIT collect DRBD usage statistics; "yes" opts in
}
common {
    handlers {
        pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";
    }
    startup {
    }
    options {
    }
    disk {
        on-io-error detach;    # on an I/O error of the backing device, detach it
    }
    net {
        cram-hmac-alg "sha1";    # HMAC algorithm used for peer authentication: sha1
        shared-secret "YzYxMDc1ZGYxYWU4";    # shared secret used for peer authentication
    }
}
[root@Legion101 /etc/drbd.d]#

3. Adding the resource & syncing the configuration

[root@Legion101 /etc/drbd.d]# cat mariadb.res
resource mariadb {
    on Legion100.dwhd.org {
        device    /dev/drbd0;
        disk      /dev/sdb1;
        address   172.16.6.100:7789;
        meta-disk internal;
    }
    on Legion101.dwhd.org {
        device    /dev/drbd0;
        disk      /dev/sdb1;
        address   172.16.6.101:7789;
        meta-disk internal;
    }
}
[root@Legion101 /etc/drbd/drbd.d]# scp global_common.conf mariadb.res node1:/etc/drbd.d/
global_common.conf    100% 2461  2.4KB/s  00:00
mariadb.res           100%  282  0.3KB/s  00:00
[root@Legion101 /etc/drbd/drbd.d]#
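Because the two `on` sections of the resource file differ only in host name and address, the file can also be generated rather than typed by hand, which avoids the two copies drifting apart. The following is a minimal sketch; the function name `drbd_resource_conf` and its `HOST=ADDRESS` argument convention are hypothetical, and the device is fixed to `/dev/drbd0` as in the file above.

```shell
#!/bin/sh
# drbd_resource_conf RESOURCE DISK PORT HOST=ADDRESS...
# Prints a DRBD resource definition with one "on" section per HOST=ADDRESS pair.
drbd_resource_conf() {
    res=$1
    disk=$2
    port=$3
    shift 3
    printf 'resource %s {\n' "$res"
    for pair in "$@"; do
        host=${pair%%=*}    # text before the first "="
        addr=${pair#*=}     # text after the first "="
        printf '    on %s {\n' "$host"
        printf '        device    /dev/drbd0;\n'
        printf '        disk      %s;\n' "$disk"
        printf '        address   %s:%s;\n' "$addr" "$port"
        printf '        meta-disk internal;\n'
        printf '    }\n'
    done
    printf '}\n'
}

# Usage (writes the same content as the mariadb.res shown above):
#   drbd_resource_conf mariadb /dev/sdb1 7789 \
#       Legion100.dwhd.org=172.16.6.100 Legion101.dwhd.org=172.16.6.101 \
#       > /etc/drbd.d/mariadb.res
```

The host names passed in must match the output of `uname -n` on each node, since DRBD selects its `on` section by host name.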

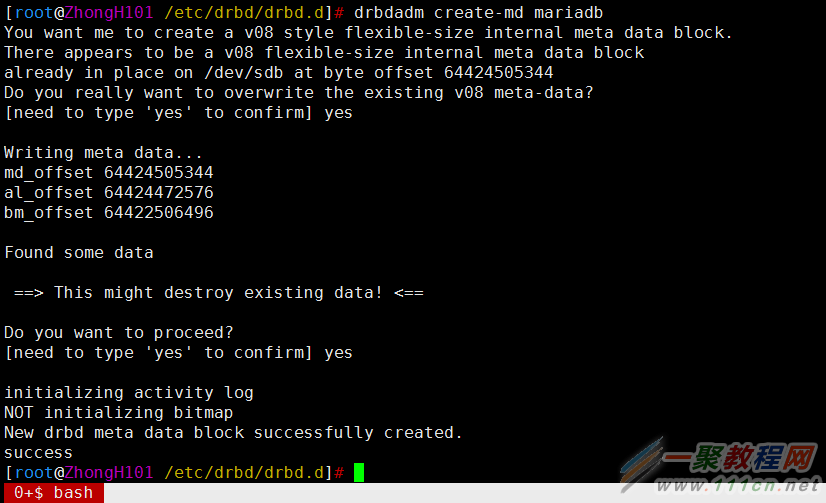

4. Initializing the resource on node1 and node2

[root@Legion100 /tmp/drbd-8.4.3/drbd]# drbdadm create-md mariadb You want me to create a v08 style flexible-size internal meta data block. There appears to be a v08 flexible-size internal meta data block already in place on /dev/sdb at byte offset 64424505344 Do you really want to overwrite the existing v08 meta-data? [need to type 'yes' to confirm] yes Writing meta data... md_offset 64424505344 al_offset 64424472576 bm_offset 64422506496 Found some data ==> This might destroy existing data! <== Do you want to proceed? [need to type 'yes' to confirm] yes initializing activity log NOT initializing bitmap New drbd meta data block successfully created. success [root@Legion100 /tmp/drbd-8.4.3/drbd]#

[root@Legion101 /etc/drbd/drbd.d]# drbdadm create-md mariadb You want me to create a v08 style flexible-size internal meta data block. There appears to be a v08 flexible-size internal meta data block already in place on /dev/sdb at byte offset 64424505344 Do you really want to overwrite the existing v08 meta-data? [need to type 'yes' to confirm] yes Writing meta data... md_offset 64424505344 al_offset 64424472576 bm_offset 64422506496 Found some data ==> This might destroy existing data! <== Do you want to proceed? [need to type 'yes' to confirm] yes initializing activity log NOT initializing bitmap New drbd meta data block successfully created. success [root@Legion101 /etc/drbd/drbd.d]#

5. Starting DRBD

[root@Legion100 /tmp/drbd-8.4.3/drbd]# /etc/init.d/drbd start Starting DRBD resources: [ create res: mariadb prepare disk: mariadb adjust disk: mariadb adjust net: mariadb ] .......... *************************************************************** DRBD's startup script waits for the peer node(s) to appear. - In case this node was already a degraded cluster before the reboot the timeout is 0 seconds. [degr-wfc-timeout] - If the peer was available before the reboot the timeout will expire after 0 seconds. [wfc-timeout] (These values are for resource 'mariadb'; 0 sec -> wait forever) To abort waiting enter 'yes' [ 14]: yes . [root@Legion100 /tmp/drbd-8.4.3/drbd]#

[root@Legion101 /etc/drbd/drbd.d]# /etc/init.d/drbd start
Starting DRBD resources: [
     create res: mariadb
   prepare disk: mariadb
    adjust disk: mariadb
     adjust net: mariadb
]
.
[root@Legion101 /etc/drbd/drbd.d]#

6. Checking the DRBD status

[root@Legion100 ~]# drbd-overview
 0:mariadb/0  Connected Secondary/Secondary Inconsistent/Inconsistent C r-----
[root@Legion100 ~]# ssh [email protected] "drbd-overview"
 0:mariadb/0  Connected Secondary/Secondary Inconsistent/Inconsistent C r-----
[root@Legion100 ~]#

7. Making node1 the primary node

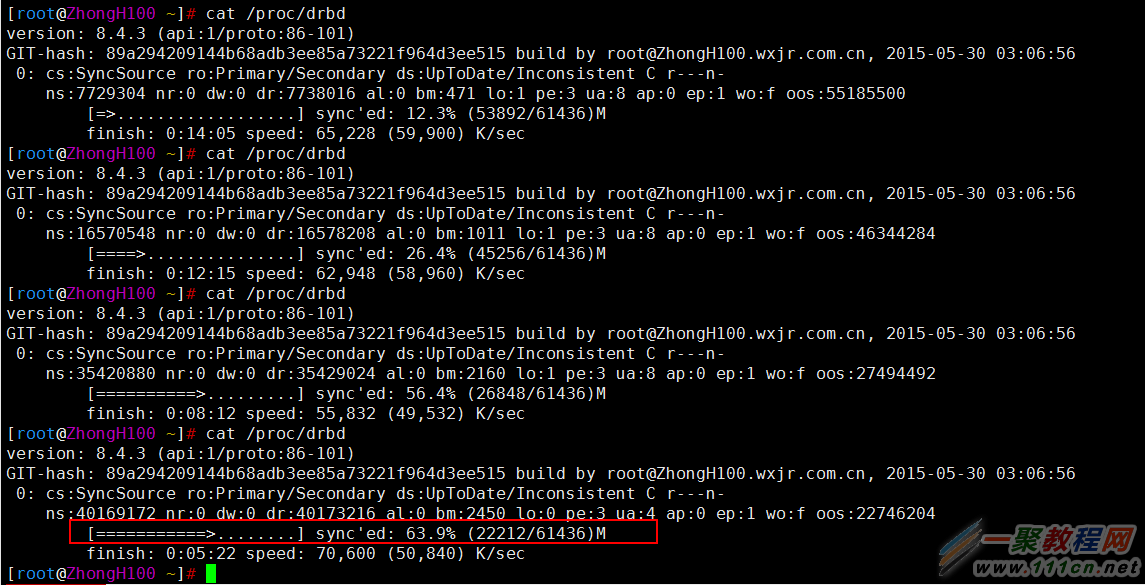

[root@Legion100 ~]# drbdadm -- --overwrite-data-of-peer primary mariadb
[root@Legion100 ~]# drbd-overview
 0:mariadb/0  SyncSource Primary/Secondary UpToDate/Inconsistent C r-----
    [>....................] sync'ed:  0.2% (61364/61436)M
[root@Legion100 ~]# ssh [email protected] "drbd-overview"
 0:mariadb/0  SyncTarget Secondary/Primary Inconsistent/UpToDate C r-----
    [>....................] sync'ed:  0.4% (61212/61436)M
[root@Legion100 ~]# cat /proc/drbd
version: 8.4.3 (api:1/proto:86-101)
GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by [email protected], 2015-05-30 03:06:56
 0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-
    ns:1750940 nr:0 dw:0 dr:1754784 al:0 bm:106 lo:0 pe:2 ua:4 ap:0 ep:1 wo:f oos:61163612
    [>....................] sync'ed:  2.8% (59728/61436)M
    finish: 0:16:11 speed: 62,976 (54,656) K/sec
[root@Legion100 ~]# drbdadm role mariadb
Primary/Secondary
[root@Legion100 ~]#
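The initial synchronization takes a while (about 16 minutes according to the `finish:` estimate above), so it is convenient to poll for completion instead of re-running the status commands by hand. A minimal sketch follows; the function names `drbd_disk_states` and `drbd_in_sync` are hypothetical, and the parsing assumes the DRBD 8.4 `/proc/drbd` layout shown above, where each device line carries a `ds:` field.

```shell
#!/bin/sh
# drbd_disk_states
# Reads /proc/drbd-style text on stdin and prints the ds: field of every
# device line, for example "UpToDate/Inconsistent".
drbd_disk_states() {
    sed -n 's/.* ds:\([^ ]*\).*/\1/p'
}

# drbd_in_sync
# Returns 0 only if at least one device is listed and every device reports
# UpToDate/UpToDate.
drbd_in_sync() {
    states=$(drbd_disk_states)
    [ -n "$states" ] || return 1
    # grep -v succeeds if any line is NOT UpToDate/UpToDate; negate that.
    ! printf '%s\n' "$states" | grep -qv '^UpToDate/UpToDate$'
}

# Usage on either node: block until the initial sync has finished.
#   while ! drbd_in_sync < /proc/drbd; do sleep 10; done
```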

8. Formatting and mounting

Proceed only after the synchronization progress shown in the figure has run to completion; keep this in mind.

[root@Legion100 ~]# mke2fs -t ext4 -L drbdMariaDB /dev/drbd0 [root@Legion100 ~]# mkdir /data [root@Legion100 ~]# mount /dev/drbd0 /data/ [root@Legion100 ~]# mount /dev/mapper/vgzhongH-root on / type ext4 (rw,acl) proc on /proc type proc (rw) sysfs on /sys type sysfs (rw) devpts on /dev/pts type devpts (rw,gid=5,mode=620) tmpfs on /dev/shm type tmpfs (rw) /dev/sda1 on /boot type ext4 (rw) /dev/mapper/vgzhongH-data on /data type ext4 (rw,acl) none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw) /dev/drbd0 on /data type ext4 (rw) [root@Legion100 ~]# df -hP Filesystem Size Used Avail Use% Mounted on /dev/mapper/vgzhongH-root 30G 3.4G 25G 13% / tmpfs 932M 37M 895M 4% /dev/shm /dev/sda1 477M 34M 418M 8% /boot /dev/mapper/vgzhongH-data 59G 52M 56G 1% /data /dev/drbd0 59G 52M 56G 1% /data [root@Legion100 ~]#

V. Installing the MariaDB Database

1. node1

[root@Legion100 ~]# groupadd -g 1500 mysql && useradd -g mysql -u 1500 -s /sbin/nologin -M mysql
[root@Legion100 ~]# cd /tmp/ && wget http://www.dwhd.org/wp-content/uploads/2015/05/cmake-3.2.2.tar.gz
[root@Legion100 /tmp]# tar xf cmake-3.2.2.tar.gz
[root@Legion100 /tmp]# cd cmake-3.2.2
[root@Legion100 /tmp/cmake-3.2.2]# ./bootstrap
[root@Legion100 /tmp/cmake-3.2.2]# [ "$?" = "0" ] && make && make install && which cmake && cd ../
/usr/local/bin/cmake
[root@Legion100 /tmp]# wget "https://downloads.mariadb.org/interstitial/mariadb-10.0.19/source/mariadb-10.0.19.tar.gz/from/http%3A//mirrors.opencas.cn/mariadb" -O mariadb-10.0.19.tar.gz
[root@Legion100 /tmp]# tar xf mariadb-10.0.19.tar.gz
[root@Legion100 /tmp]# cd mariadb-10.0.19
[root@Legion100 /tmp/mariadb-10.0.19]# cmake . -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \
-DMYSQL_DATADIR=/data/mysql \
-DWITH_SSL=system \
-DWITH_INNOBASE_STORAGE_ENGINE=1 \
-DWITH_ARCHIVE_STORAGE_ENGINE=1 \
-DWITH_BLACKHOLE_STORAGE_ENGINE=1 \
-DWITH_SPHINX_STORAGE_ENGINE=1 \
-DWITH_ARIA_STORAGE_ENGINE=1 \
-DWITH_XTRADB_STORAGE_ENGINE=1 \
-DWITH_PARTITION_STORAGE_ENGINE=1 \
-DWITH_FEDERATEDX_STORAGE_ENGINE=1 \
-DWITH_MYISAM_STORAGE_ENGINE=1 \
-DWITH_PERFSCHEMA_STORAGE_ENGINE=1 \
-DWITH_EXTRA_CHARSETS=all \
-DWITH_EMBEDDED_SERVER=1 \
-DWITH_READLINE=1 \
-DWITH_ZLIB=system \
-DWITH_LIBWRAP=0 \
-DEXTRA_CHARSETS=all \
-DENABLED_LOCAL_INFILE=1 \
-DMYSQL_UNIX_ADDR=/tmp/mysql.sock \
-DDEFAULT_CHARSET=utf8 \
-DDEFAULT_COLLATION=utf8_general_ci
[root@Legion100 /tmp/mariadb-10.0.19]# make -j $(awk '/processor/{i++}END{print i}' /proc/cpuinfo) && make install && echo $?
[root@Legion100 /tmp/mariadb-10.0.19]# cd /usr/local/mysql/
[root@Legion100 /usr/local/mysql]# echo "export PATH=/usr/local/mysql/bin:\$PATH" > /etc/profile.d/mariadb10.0.19.sh
[root@Legion100 /usr/local/mysql]# . /etc/profile.d/mariadb10.0.19.sh
[root@Legion100 /usr/local/mysql]# cp -a support-files/mysql.server /etc/rc.d/init.d/mysqld
[root@Legion100 /usr/local/mysql]# \cp support-files/my-large.cnf /etc/my.cnf
[root@Legion100 /usr/local/mysql]# sed -i '/query_cache_size/a datadir = /data/mysql' /etc/my.cnf
[root@Legion100 /usr/local/mysql]# mkdir -p /data/mysql
[root@Legion100 /usr/local/mysql]# chown -R mysql.mysql /data/mysql
[root@Legion100 /usr/local/mysql]# /usr/local/mysql/scripts/mysql_install_db --user=mysql --datadir=/data/mysql/ --basedir=/usr/local/mysql
[root@Legion100 /usr/local/mysql]# scp /etc/rc.d/init.d/mysqld 172.16.6.101:/etc/rc.d/init.d/mysqld
mysqld                    100%   12KB  11.9KB/s  00:00
[root@Legion100 /usr/local/mysql]# scp /etc/my.cnf 172.16.6.101:/etc/
my.cnf                    100% 4903    4.8KB/s  00:00
[root@Legion100 /usr/local/mysql]# scp /tmp/{cmake-3.2.2.tar.gz,mariadb-10.0.19.tar.gz} 172.16.6.101:/tmp
cmake-3.2.2.tar.gz        100% 6288KB   6.1MB/s  00:00
mariadb-10.0.19.tar.gz    100%   54MB  53.6MB/s  00:01
[root@Legion100 /usr/local/mysql]#

2. node2

[root@Legion101 /etc/drbd/drbd.d]# groupadd -g 1500 mysql && useradd -g mysql -u 1500 -s /sbin/nologin -M mysql
[root@Legion101 /etc/drbd/drbd.d]# cd /tmp/
[root@Legion101 /tmp]# tar xf cmake-3.2.2.tar.gz
[root@Legion101 /tmp]# cd cmake-3.2.2
[root@Legion101 /tmp/cmake-3.2.2]# ./bootstrap
[root@Legion101 /tmp/cmake-3.2.2]# [ "$?" = "0" ] && make && make install && which cmake && cd ../
[root@Legion101 /tmp]# tar xf mariadb-10.0.19.tar.gz
[root@Legion101 /tmp]# cd mariadb-10.0.19
[root@Legion101 /tmp/mariadb-10.0.19]# cmake . -DCMAKE_INSTALL_PREFIX=/usr/local/mysql \
-DMYSQL_DATADIR=/data/mysql \
-DWITH_SSL=system \
-DWITH_INNOBASE_STORAGE_ENGINE=1 \
-DWITH_ARCHIVE_STORAGE_ENGINE=1 \
-DWITH_BLACKHOLE_STORAGE_ENGINE=1 \
-DWITH_SPHINX_STORAGE_ENGINE=1 \
-DWITH_ARIA_STORAGE_ENGINE=1 \
-DWITH_XTRADB_STORAGE_ENGINE=1 \
-DWITH_PARTITION_STORAGE_ENGINE=1 \
-DWITH_FEDERATEDX_STORAGE_ENGINE=1 \
-DWITH_MYISAM_STORAGE_ENGINE=1 \
-DWITH_PERFSCHEMA_STORAGE_ENGINE=1 \
-DWITH_EXTRA_CHARSETS=all \
-DWITH_EMBEDDED_SERVER=1 \
-DWITH_READLINE=1 \
-DWITH_ZLIB=system \
-DWITH_LIBWRAP=0 \
-DEXTRA_CHARSETS=all \
-DENABLED_LOCAL_INFILE=1 \
-DMYSQL_UNIX_ADDR=/tmp/mysql.sock \
-DDEFAULT_CHARSET=utf8 \
-DDEFAULT_COLLATION=utf8_general_ci
[root@Legion101 /tmp/mariadb-10.0.19]# make -j $(awk '/processor/{i++}END{print i}' /proc/cpuinfo) && make install && echo $?
[root@Legion101 /usr/local/mysql]# echo "export PATH=/usr/local/mysql/bin:\$PATH" > /etc/profile.d/mariadb10.0.19.sh
[root@Legion101 /usr/local/mysql]# . /etc/profile.d/mariadb10.0.19.sh

3. Make sure the mysql user's UID and GID are identical on node1 and node2

[root@Legion100 /usr/local/mysql]# id mysql
uid=1500(mysql) gid=1500(mysql) groups=1500(mysql)
[root@Legion100 /usr/local/mysql]#

[root@Legion101 /tmp/mariadb-10.0.19]# id mysql
uid=1500(mysql) gid=1500(mysql) groups=1500(mysql)
[root@Legion101 /tmp/mariadb-10.0.19]#

4. Make node1 the DRBD primary

[root@Legion100 /usr/local/mysql]# drbdadm primary mariadb [root@Legion100 /usr/local/mysql]# drbd-overview 0:mariadb/0 Connected Primary/Secondary UpToDate/UpToDate C r----- /data ext4 59G 162M 56G 1% [root@Legion100 /usr/local/mysql]# cat /proc/drbd version: 8.4.3 (api:1/proto:86-101) GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by [email protected], 2015-05-30 03:06:56 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r----- ns:64157908 nr:0 dw:1245304 dr:62914049 al:328 bm:3839 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0 [root@Legion100 /usr/local/mysql]#

5. Start and test MariaDB on node1

[root@Legion100 /usr/local/mysql]# /etc/init.d/mysqld start
Starting MySQL. [  OK  ]
[root@Legion100 /usr/local/mysql]# mysql <<< "show databases;"
Database
information_schema
mysql
performance_schema
test
[root@Legion100 /usr/local/mysql]#

6. Stop MySQL on node1 and disable it at boot

[root@Legion100 /usr/local/mysql]# /etc/init.d/mysqld stop
Shutting down MySQL... [  OK  ]
[root@Legion100 /usr/local/mysql]# chkconfig mysqld off
[root@Legion100 /usr/local/mysql]# chkconfig --list mysqld
mysqld          0:off   1:off   2:off   3:off   4:off   5:off   6:off
[root@Legion100 /usr/local/mysql]# umount /data/
[root@Legion100 /usr/local/mysql]#

7. Demote node1 to secondary, then promote DRBD on node2 to primary and mount it

[root@Legion100 /usr/local/mysql]# drbdadm secondary mariadb
[root@Legion100 /usr/local/mysql]# drbdadm role mariadb
Secondary/Secondary
[root@Legion100 /usr/local/mysql]#

[root@Legion101 /etc/drbd/drbd.d]# drbdadm primary mariadb [root@Legion101 /etc/drbd/drbd.d]# drbdadm role mariadb Primary/Secondary [root@Legion101 /etc/drbd/drbd.d]# mount /dev/drbd0 /data/ [root@Legion101 /etc/drbd/drbd.d]# mount /dev/mapper/vgzhongH-root on / type ext4 (rw,acl) proc on /proc type proc (rw) sysfs on /sys type sysfs (rw) devpts on /dev/pts type devpts (rw,gid=5,mode=620) tmpfs on /dev/shm type tmpfs (rw) /dev/sda1 on /boot type ext4 (rw) /dev/mapper/vgzhongH-data on /data type ext4 (rw,acl) none on /proc/sys/fs/binfmt_misc type binfmt_misc (rw) /dev/drbd0 on /data type ext4 (rw) [root@Legion101 /etc/drbd/drbd.d]# df -hP Filesystem Size Used Avail Use% Mounted on /dev/mapper/vgzhongH-root 30G 7.5G 21G 27% / tmpfs 932M 22M 910M 3% /dev/shm /dev/sda1 477M 34M 418M 8% /boot /dev/mapper/vgzhongH-data 59G 162M 56G 1% /data /dev/drbd0 59G 162M 56G 1% /data [root@Legion101 /etc/drbd/drbd.d]#

8. Start and test MariaDB on node2

[root@Legion101 /etc/drbd/drbd.d]# /etc/init.d/mysqld start
Starting MySQL.. [  OK  ]
[root@Legion101 /etc/drbd/drbd.d]# mysql <<< "show databases;"
Database
information_schema
mysql
performance_schema
test
[root@Legion101 /etc/drbd/drbd.d]#

9. Stop MariaDB on node2 and disable it at boot

[root@Legion101 /etc/drbd/drbd.d]# /etc/init.d/mysqld stop
Shutting down MySQL... [  OK  ]
[root@Legion101 /etc/drbd/drbd.d]# chkconfig mysqld off
[root@Legion101 /etc/drbd/drbd.d]# chkconfig --list mysqld
mysqld          0:off   1:off   2:off   3:off   4:off   5:off   6:off
[root@Legion101 ~]# umount /data/
[root@Legion101 /etc/drbd/drbd.d]#
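Steps 6 to 9 amount to a manual failover whose order matters: the database must stop before the filesystem is unmounted, and the old primary must be demoted before the peer is promoted. The sketch below only prints that sequence as a dry run and executes nothing. The function name `failover_plan` is hypothetical, and the defaults (resource `mariadb`, mount point `/data`, device `/dev/drbd0`, root SSH access) are taken from this article.

```shell
#!/bin/sh
# failover_plan FROM_HOST TO_HOST [RESOURCE] [MOUNTPOINT]
# Prints, in order, the commands that move the service from FROM_HOST to
# TO_HOST. Dry run only: nothing is executed.
failover_plan() {
    from=$1
    to=$2
    res=${3:-mariadb}
    mnt=${4:-/data}
    cat <<EOF
ssh root@$from /etc/init.d/mysqld stop
ssh root@$from umount $mnt
ssh root@$from drbdadm secondary $res
ssh root@$to drbdadm primary $res
ssh root@$to mount /dev/drbd0 $mnt
ssh root@$to /etc/init.d/mysqld start
EOF
}

# Usage: review the plan before running any of it by hand.
#   failover_plan Legion100.dwhd.org Legion101.dwhd.org
```

Pacemaker automates exactly this ordering in the next section through the colocation and order constraints.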

VI. Managing Resources with crmsh

1. Stop drbd and disable it at boot

[root@Legion100 ~]# /etc/init.d/drbd stop
Stopping all DRBD resources: .
[root@Legion100 ~]# chkconfig drbd off
[root@Legion100 ~]# chkconfig --list drbd
drbd            0:off   1:off   2:off   3:off   4:off   5:off   6:off
[root@Legion100 ~]# ssh [email protected] "/etc/init.d/drbd stop"
Stopping all DRBD resources: .
[root@Legion100 ~]# ssh [email protected] "chkconfig drbd off && chkconfig --list drbd"
drbd            0:off   1:off   2:off   3:off   4:off   5:off   6:off
[root@Legion100 ~]#

2. Adding the drbd resource

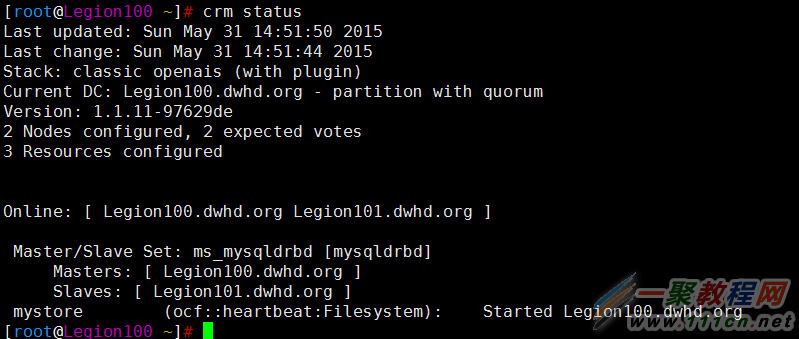

[root@Legion100 ~]# crm
crm(live)# configure
crm(live)configure# property stonith-enabled=false
crm(live)configure# property no-quorum-policy=ignore
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# primitive mysqldrbd ocf:linbit:drbd params drbd_resource=mariadb op start timeout=240 op stop timeout=100 op monitor role=Master interval=20 timeout=30 op monitor role=Slave interval=30 timeout=30
crm(live)configure# verify
crm(live)configure# ms ms_mysqldrbd mysqldrbd meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
crm(live)configure# verify
crm(live)configure# show
node Legion100.dwhd.org
node Legion101.dwhd.org
primitive mysqldrbd ocf:linbit:drbd \
    params drbd_resource=mariadb \
    op start timeout=240 interval=0 \
    op stop timeout=100 interval=0 \
    op monitor role=Master interval=20 timeout=30 \
    op monitor role=Slave interval=30 timeout=30
ms ms_mysqldrbd mysqldrbd \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# commit
crm(live)configure# quit
bye
[root@Legion100 ~]# crm status
Last updated: Sun May 31 14:45:53 2015
Last change: Sun May 31 14:45:46 2015
Stack: classic openais (with plugin)
Current DC: Legion100.dwhd.org - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured

Online: [ Legion100.dwhd.org Legion101.dwhd.org ]

 Master/Slave Set: ms_mysqldrbd [mysqldrbd]
     Masters: [ Legion101.dwhd.org ]
     Slaves: [ Legion100.dwhd.org ]
[root@Legion100 ~]#

3. Adding the filesystem resource

[root@Legion100 ~]# crm configure
crm(live)configure# primitive mystore ocf:heartbeat:Filesystem params device=/dev/drbd0 directory=/data fstype=ext4 op start timeout=60 op stop timeout=60
crm(live)configure# verify
crm(live)configure# colocation mystore_with_ms_mysqldrbd inf: mystore ms_mysqldrbd:Master
crm(live)configure# order mystore_after_ms_mysqldrbd mandatory: ms_mysqldrbd:promote mystore:start
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node Legion100.dwhd.org
node Legion101.dwhd.org
primitive mysqldrbd ocf:linbit:drbd \
    params drbd_resource=mariadb \
    op start timeout=240 interval=0 \
    op stop timeout=100 interval=0 \
    op monitor role=Master interval=20 timeout=30 \
    op monitor role=Slave interval=30 timeout=30
primitive mystore Filesystem \
    params device="/dev/drbd0" directory="/data" fstype=ext4 \
    op start timeout=60 interval=0 \
    op stop timeout=60 interval=0
ms ms_mysqldrbd mysqldrbd \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
colocation mystore_with_ms_mysqldrbd inf: mystore ms_mysqldrbd:Master
order mystore_after_ms_mysqldrbd Mandatory: ms_mysqldrbd:promote mystore:start
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure#

4. Adding the mysql resource

[root@Legion100 ~]# crm configure
crm(live)configure# primitive mysqld lsb:mysqld
crm(live)configure# colocation mysqld_with_mystore inf: mysqld mystore
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# quit
bye
[root@Legion100 ~]# crm status
Last updated: Sun May 31 14:53:44 2015
Last change: Sun May 31 14:53:39 2015
Stack: classic openais (with plugin)
Current DC: Legion100.dwhd.org - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
4 Resources configured

Online: [ Legion100.dwhd.org Legion101.dwhd.org ]

 Master/Slave Set: ms_mysqldrbd [mysqldrbd]
     Masters: [ Legion100.dwhd.org ]
     Slaves: [ Legion101.dwhd.org ]
 mystore (ocf::heartbeat:Filesystem): Started Legion100.dwhd.org
 mysqld (lsb:mysqld): Started Legion100.dwhd.org
[root@Legion100 ~]# crm configure
crm(live)configure# order mysqld_after_mystore mandatory: mystore mysqld
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node Legion100.dwhd.org
node Legion101.dwhd.org
primitive mysqld lsb:mysqld
primitive mysqldrbd ocf:linbit:drbd \
    params drbd_resource=mariadb \
    op start timeout=240 interval=0 \
    op stop timeout=100 interval=0 \
    op monitor role=Master interval=20 timeout=30 \
    op monitor role=Slave interval=30 timeout=30
primitive mystore Filesystem \
    params device="/dev/drbd0" directory="/data" fstype=ext4 \
    op start timeout=60 interval=0 \
    op stop timeout=60 interval=0
ms ms_mysqldrbd mysqldrbd \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
colocation mysqld_with_mystore inf: mysqld mystore
colocation mystore_with_ms_mysqldrbd inf: mystore ms_mysqldrbd:Master
order mysqld_after_mystore Mandatory: mystore mysqld
order mystore_after_ms_mysqldrbd Mandatory: ms_mysqldrbd:promote mystore:start
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# quit
bye
[root@Legion100 ~]# crm status
Last updated: Sun May 31 14:54:47 2015
Last change: Sun May 31 14:54:22 2015
Stack: classic openais (with plugin)
Current DC: Legion100.dwhd.org - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
4 Resources configured

Online: [ Legion100.dwhd.org Legion101.dwhd.org ]

 Master/Slave Set: ms_mysqldrbd [mysqldrbd]
     Masters: [ Legion100.dwhd.org ]
     Slaves: [ Legion101.dwhd.org ]
 mystore (ocf::heartbeat:Filesystem): Started Legion100.dwhd.org
 mysqld (lsb:mysqld): Started Legion100.dwhd.org
[root@Legion100 ~]#

5、Add the vip resource

    [root@Legion100 ~]# crm configure
    crm(live)configure# primitive vip ocf:heartbeat:IPaddr params ip="172.16.7.200" op monitor interval=20 timeout=20 on-fail=restart
    crm(live)configure# verify
    crm(live)configure# colocation vip_with_ms_mysqldrbd inf: ms_mysqldrbd:Master vip
    crm(live)configure# verify
    crm(live)configure# commit
    crm(live)configure# show
    node Legion100.dwhd.org
    node Legion101.dwhd.org
    primitive mysqld lsb:mysqld
    primitive mysqldrbd ocf:linbit:drbd \
        params drbd_resource=web \
        op start timeout=240 interval=0 \
        op stop timeout=100 interval=0 \
        op monitor role=Master interval=20 timeout=30 \
        op monitor role=Slave interval=30 timeout=30
    primitive mystore Filesystem \
        params device="/dev/drbd0" directory="/data" fstype=ext4 \
        op start timeout=60 interval=0 \
        op stop timeout=60 interval=0
    primitive vip IPaddr \
        params ip=172.16.7.200 \
        op monitor interval=20 timeout=20 on-fail=restart
    ms ms_mysqldrbd mysqldrbd \
        meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
    colocation mysqld_with_mystore inf: mysqld mystore
    colocation mystore_with_ms_mysqldrbd inf: mystore ms_mysqldrbd:Master
    colocation vip_with_ms_mysqldrbd inf: ms_mysqldrbd:Master vip
    order mysqld_after_mystore Mandatory: mystore mysqld
    order mystore_after_ms_mysqldrbd Mandatory: ms_mysqldrbd:promote mystore:start
    property cib-bootstrap-options: \
        dc-version=1.1.11-97629de \
        cluster-infrastructure="classic openais (with plugin)" \
        expected-quorum-votes=2 \
        stonith-enabled=false \
        no-quorum-policy=ignore
    crm(live)configure# quit
    bye
    [root@Legion100 ~]# crm status
    Last updated: Sun May 31 15:16:36 2015
    Last change: Sun May 31 15:16:30 2015
    Stack: classic openais (with plugin)
    Current DC: Legion100.dwhd.org - partition with quorum
    Version: 1.1.11-97629de
    2 Nodes configured, 2 expected votes
    5 Resources configured
    Online: [ Legion100.dwhd.org Legion101.dwhd.org ]
    Master/Slave Set: ms_mysqldrbd [mysqldrbd]
        Masters: [ Legion100.dwhd.org ]
        Slaves: [ Legion101.dwhd.org ]
    mystore (ocf::heartbeat:Filesystem): Started Legion100.dwhd.org
    mysqld (lsb:mysqld): Started Legion100.dwhd.org
    vip (ocf::heartbeat:IPaddr): Started Legion100.dwhd.org
    [root@Legion100 ~]#

6、Check that mysqld is running, the DRBD device is mounted, and the vip is up

    [root@Legion100 ~]# netstat -tnlp | grep 3306
    tcp   0   0 :::3306   :::*   LISTEN   81037/mysqld
    [root@Legion100 ~]# df -hP
    Filesystem                 Size  Used Avail Use% Mounted on
    /dev/mapper/vgzhongH-root   30G  7.1G   21G  26% /
    tmpfs                      932M   37M  895M   4% /dev/shm
    /dev/sda1                  477M   34M  418M   8% /boot
    /dev/drbd0                 4.9G  120M  4.5G   3% /data
    [root@Legion100 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:e6:29:99 brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.100/16 brd 172.16.255.255 scope global eth0
        inet 172.16.7.200/16 brd 172.16.255.255 scope global secondary eth0
        inet6 2001:470:24:9e2:20c:29ff:fee6:2999/64 scope global dynamic
           valid_lft 7190sec preferred_lft 1790sec
        inet6 fe80::20c:29ff:fee6:2999/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether 1a:54:78:d1:47:aa brd ff:ff:ff:ff:ff:ff
    [root@Legion100 ~]#
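The three manual checks in this step (a listener on port 3306, /dev/drbd0 mounted on /data, and 172.16.7.200 bound to an interface) could also be scripted. The following is only a minimal sketch and not part of the original setup: the helper names `listens_on`, `mounted_at` and `has_addr` are made up for illustration, and each helper reads the output of the corresponding command on stdin so that it can be tried without a running cluster.

```shell
#!/bin/sh
# Hypothetical helpers (the names are illustrative, not from the article).
# Each one reads command output on stdin and reports the result
# through its exit status: 0 when the condition holds, 1 otherwise.

# stdin: `netstat -tnl` output; succeeds if something listens on TCP port $1.
listens_on() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }'
}

# stdin: `df -P` output; succeeds if device $1 is mounted on directory $2.
mounted_at() {
    awk -v dev="$1" -v dir="$2" '
        $1 == dev && $NF == dir { found = 1 }
        END { exit !found }'
}

# stdin: `ip addr` output; succeeds if IPv4 address $1 is configured.
has_addr() {
    awk -v ip="$1" '
        $1 == "inet" { split($2, parts, "/"); if (parts[1] == ip) found = 1 }
        END { exit !found }'
}
```

With these definitions loaded, something like `netstat -tnl | listens_on 3306 && df -P | mounted_at /dev/drbd0 /data && ip addr | has_addr 172.16.7.200` should succeed on the node that currently holds the resources and fail on the other node.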

7、Grant MySQL privileges and test a remote connection

    [root@Legion100 ~]# mysql
    Welcome to the MariaDB monitor. Commands end with ; or \g.
    Your MariaDB connection id is 6
    Server version: 10.0.19-MariaDB-log Source distribution
    Copyright (c) 2000, 2015, Oracle, MariaDB Corporation Ab and others.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    MariaDB [(none)]> grant all on *.* to root@"172.16.%.%" identified by "lookback";
    Query OK, 0 rows affected (0.00 sec)
    MariaDB [(none)]> flush privileges;
    Query OK, 0 rows affected (0.00 sec)
    MariaDB [(none)]> \q
    Bye
    [root@Legion100 ~]#
    [root@Legion101 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:14:0f:ea brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.101/16 brd 172.16.255.255 scope global eth0
        inet6 2001:470:24:9e2:20c:29ff:fe14:fea/64 scope global dynamic
           valid_lft 6950sec preferred_lft 1550sec
        inet6 fe80::20c:29ff:fe14:fea/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether f6:6d:0c:e5:58:d5 brd ff:ff:ff:ff:ff:ff
    [root@Legion101 ~]# mysql -uroot -plookback -h172.16.7.200
    Welcome to the MariaDB monitor. Commands end with ; or \g.
    Your MariaDB connection id is 7
    Server version: 10.0.19-MariaDB-log Source distribution
    Copyright (c) 2000, 2015, Oracle, MariaDB Corporation Ab and others.
    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
    MariaDB [(none)]> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | mysql              |
    | performance_schema |
    | test               |
    +--------------------+
    4 rows in set (0.08 sec)
    MariaDB [(none)]> \q
    Bye
    [root@Legion101 ~]#

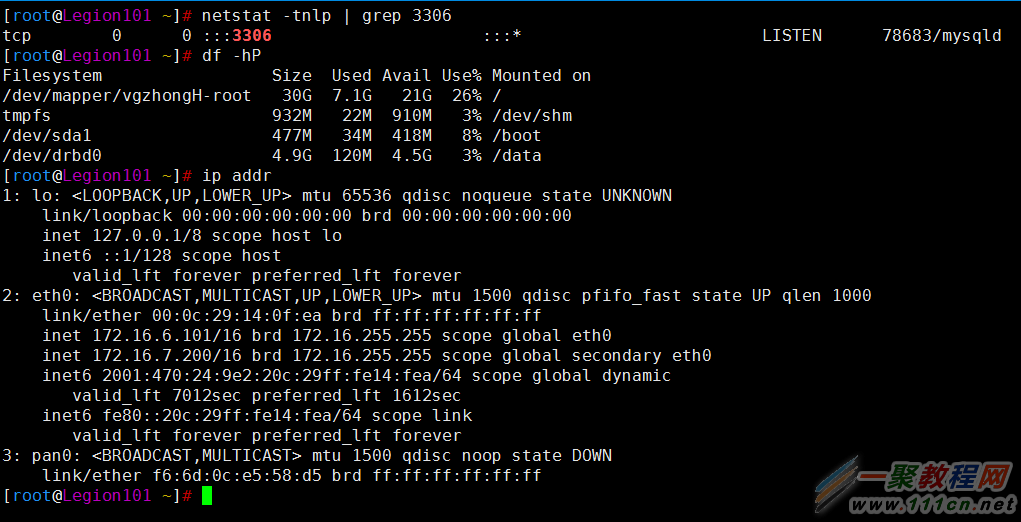

8、Switch nodes and verify resource failover

    [root@Legion100 ~]# crm status
    Last updated: Sun May 31 15:27:52 2015
    Last change: Sun May 31 15:27:49 2015
    Stack: classic openais (with plugin)
    Current DC: Legion100.dwhd.org - partition with quorum
    Version: 1.1.11-97629de
    2 Nodes configured, 2 expected votes
    5 Resources configured
    Online: [ Legion100.dwhd.org Legion101.dwhd.org ]
    Master/Slave Set: ms_mysqldrbd [mysqldrbd]
        Masters: [ Legion100.dwhd.org ]    # node1 is the master at the start
        Slaves: [ Legion101.dwhd.org ]
    mystore (ocf::heartbeat:Filesystem): Started Legion100.dwhd.org
    mysqld (lsb:mysqld): Started Legion100.dwhd.org
    vip (ocf::heartbeat:IPaddr): Started Legion100.dwhd.org
    [root@Legion100 ~]# crm node standby Legion100.dwhd.org
    [root@Legion100 ~]# crm status
    Last updated: Sun May 31 15:28:50 2015
    Last change: Sun May 31 15:28:03 2015
    Stack: classic openais (with plugin)
    Current DC: Legion100.dwhd.org - partition with quorum
    Version: 1.1.11-97629de
    2 Nodes configured, 2 expected votes
    5 Resources configured
    Node Legion100.dwhd.org: standby
    Online: [ Legion101.dwhd.org ]
    Master/Slave Set: ms_mysqldrbd [mysqldrbd]
        Masters: [ Legion101.dwhd.org ]    # node2 is now the master, so the failover succeeded
        Stopped: [ Legion100.dwhd.org ]
    mystore (ocf::heartbeat:Filesystem): Started Legion101.dwhd.org
    mysqld (lsb:mysqld): Started Legion101.dwhd.org
    vip (ocf::heartbeat:IPaddr): Started Legion101.dwhd.org
    [root@Legion100 ~]#

    [root@Legion100 ~]# netstat -tnlp | grep 3306
    [root@Legion100 ~]# df -hP
    Filesystem                 Size  Used Avail Use% Mounted on
    /dev/mapper/vgzhongH-root   30G  7.1G   21G  26% /
    tmpfs                      932M   37M  895M   4% /dev/shm
    /dev/sda1                  477M   34M  418M   8% /boot
    [root@Legion100 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:e6:29:99 brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.100/16 brd 172.16.255.255 scope global eth0
        inet6 2001:470:24:9e2:20c:29ff:fee6:2999/64 scope global dynamic
           valid_lft 7061sec preferred_lft 1661sec
        inet6 fe80::20c:29ff:fee6:2999/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether 1a:54:78:d1:47:aa brd ff:ff:ff:ff:ff:ff
    [root@Legion100 ~]#

    [root@Legion101 ~]# netstat -tnlp | grep 3306
    tcp   0   0 :::3306   :::*   LISTEN   78683/mysqld
    [root@Legion101 ~]# df -hP
    Filesystem                 Size  Used Avail Use% Mounted on
    /dev/mapper/vgzhongH-root   30G  7.1G   21G  26% /
    tmpfs                      932M   22M  910M   3% /dev/shm
    /dev/sda1                  477M   34M  418M   8% /boot
    /dev/drbd0                 4.9G  120M  4.5G   3% /data
    [root@Legion101 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:14:0f:ea brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.101/16 brd 172.16.255.255 scope global eth0
        inet 172.16.7.200/16 brd 172.16.255.255 scope global secondary eth0
        inet6 2001:470:24:9e2:20c:29ff:fe14:fea/64 scope global dynamic
           valid_lft 7012sec preferred_lft 1612sec
        inet6 fe80::20c:29ff:fe14:fea/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether f6:6d:0c:e5:58:d5 brd ff:ff:ff:ff:ff:ff
    [root@Legion101 ~]#
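Reading `crm status` by eye works for a one-off test, but the same check can be expressed as a small script. The sketch below is an illustration only: `current_master`, `resource_node` and `stack_on_master` are hypothetical helper names, and they assume the `crm status` layout shown in this step (a `Masters: [ node ]` line and `name (agent): Started node` lines), which may differ in other Pacemaker or crmsh versions.

```shell
#!/bin/sh
# Hypothetical helpers; all of them read `crm status` output on stdin.

# Print the node listed first on the first "Masters:" line.
current_master() {
    awk '$1 == "Masters:" && !seen++ { print $3 }'
}

# Print the node on which primitive resource $1 is reported as Started.
resource_node() {
    awk -v rsc="$1" '$1 == rsc && $3 == "Started" { print $4 }'
}

# Succeed only if mystore, mysqld and vip all run on the current DRBD master.
stack_on_master() {
    status=$(cat)
    master=$(printf '%s\n' "$status" | current_master)
    [ -n "$master" ] || return 1
    for rsc in mystore mysqld vip; do
        [ "$(printf '%s\n' "$status" | resource_node "$rsc")" = "$master" ] || return 1
    done
    return 0
}
```

After `crm node standby Legion100.dwhd.org`, running `crm status | current_master` would be expected to print `Legion101.dwhd.org`, and `crm status | stack_on_master` should succeed once all resources have moved. The walkthrough stops with node1 in standby; `crm node online Legion100.dwhd.org` returns it to the cluster afterwards.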

9、Test taking the vip down

    [root@Legion101 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:14:0f:ea brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.101/16 brd 172.16.255.255 scope global eth0
        inet 172.16.7.200/16 brd 172.16.255.255 scope global secondary eth0
        inet6 2001:470:24:9e2:20c:29ff:fe14:fea/64 scope global dynamic
           valid_lft 6880sec preferred_lft 1480sec
        inet6 fe80::20c:29ff:fe14:fea/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether f6:6d:0c:e5:58:d5 brd ff:ff:ff:ff:ff:ff
    [root@Legion101 ~]# ip addr del 172.16.7.200/16 dev eth0
    [root@Legion101 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:14:0f:ea brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.101/16 brd 172.16.255.255 scope global eth0
        inet6 2001:470:24:9e2:20c:29ff:fe14:fea/64 scope global dynamic
           valid_lft 6797sec preferred_lft 1397sec
        inet6 fe80::20c:29ff:fe14:fea/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether f6:6d:0c:e5:58:d5 brd ff:ff:ff:ff:ff:ff
    [root@Legion101 ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:14:0f:ea brd ff:ff:ff:ff:ff:ff
        inet 172.16.6.101/16 brd 172.16.255.255 scope global eth0
        inet 172.16.7.200/16 brd 172.16.255.255 scope global secondary eth0
        inet6 2001:470:24:9e2:20c:29ff:fe14:fea/64 scope global dynamic
           valid_lft 6793sec preferred_lft 1393sec
        inet6 fe80::20c:29ff:fe14:fea/64 scope link
           valid_lft forever preferred_lft forever
    3: pan0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN
        link/ether f6:6d:0c:e5:58:d5 brd ff:ff:ff:ff:ff:ff
    [root@Legion101 ~]#

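This shows that the vip is restarted as well: after the address is deleted by hand, the monitor operation (interval=20, on-fail=restart) notices that it is missing and the cluster brings the vip resource back up on the same node.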
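Instead of re-running `ip addr` by hand until the address reappears, the wait can be scripted. This is a rough sketch under assumptions: `wait_for` and `vip_present` are hypothetical helpers, and the retry count and delay used in the usage note are arbitrary values chosen to comfortably cover the 20-second monitor interval configured for the vip resource.

```shell
#!/bin/sh
# Hypothetical helpers for watching the vip come back after `ip addr del`.

# Retry a command: wait_for TRIES DELAY_SECONDS COMMAND [ARGS...]
# Succeeds as soon as COMMAND succeeds, fails after TRIES attempts.
wait_for() {
    tries=$1
    delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        tries=$((tries - 1))
        sleep "$delay"
    done
    return 1
}

# Succeeds if IPv4 address $1 is currently configured on this host.
# -o prints one line per address; -F makes grep treat the dots literally.
vip_present() {
    ip -4 -o addr show | grep -qF "inet $1/"
}
```

On the active node this could be used as `ip addr del 172.16.7.200/16 dev eth0 && wait_for 30 2 vip_present 172.16.7.200`, which polls every 2 seconds for up to a minute and returns success once the cluster has restored the address.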
